Matlab provides a variety of ways to define callbacks for asynchronous events (such as interactive GUI actions or timer invocations). We can provide a function handle, a cell-array (of function handle and extra parameters), and in some cases also a string that will be eval’ed at run-time. For example:

```matlab
hButton = uicontrol(..., 'Callback', @myCallbackFunc);               % function handle
hButton = uicontrol(..., 'Callback', {@myCallbackFunc,data1,data2}); % cell-array
hButton = uicontrol(..., 'Callback', 'disp clicked!');               % string to eval
```

The first format, function handle, is by far the most common in Matlab code. This format has two variants: we can specify the direct handle to the function (as in @myCallbackFunc), or we could use an anonymous function, like this:

```matlab
hButton = uicontrol(..., 'Callback', @(h,e) myCallbackFunc(h,e));  % anonymous function handle
```

All Matlab callbacks accept two input args by default: the control’s handle (hButton in this example), and a struct or object that contains the event’s data in internal fields. In our anonymous function variant, we therefore defined a function that accepts two input args (h,e) and calls myCallbackFunc(h,e).

These two variants are functionally equivalent:

```matlab
hButton = uicontrol(..., 'Callback', @myCallbackFunc);             % direct function handle
hButton = uicontrol(..., 'Callback', @(h,e) myCallbackFunc(h,e));  % anonymous function handle
```
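To make the calling convention concrete, here is a minimal sketch (demoCallbackForms and myCallbackFunc are illustrative names, saved together as demoCallbackForms.m). The direct and anonymous handles deliver the same two default args, while the cell-array form from the start of this article appends its extra parameters after them:

```matlab
function hButtons = demoCallbackForms
    % Minimal sketch: three buttons wired to the same illustrative callback
    hFig = figure;
    % 1) Direct function handle: Matlab calls myCallbackFunc(hButton, eventData)
    hButtons(1) = uicontrol(hFig, 'Position',[20 20 120 30], 'String','direct', ...
                            'Callback', @myCallbackFunc);
    % 2) Anonymous wrapper: same arguments, passed through an extra call layer
    hButtons(2) = uicontrol(hFig, 'Position',[20 60 120 30], 'String','anonymous', ...
                            'Callback', @(h,e) myCallbackFunc(h,e));
    % 3) Cell-array form: extra parameters are appended after the two default args
    hButtons(3) = uicontrol(hFig, 'Position',[20 100 120 30], 'String','cell-array', ...
                            'Callback', {@myCallbackFunc, 42, 'extra'});
end

function myCallbackFunc(hObject, eventData, varargin)
    % hObject:   the control whose callback fired
    % eventData: event data (its content depends on the Matlab release)
    % varargin:  extra parameters, present only for the cell-array form
    fprintf('%s callback: %d extra arg(s)\n', get(hObject,'String'), numel(varargin));
end
```

Clicking the three buttons should report 0, 0 and 2 extra args respectively.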

In my experience, the anonymous function variant is widely used – I see it extensively in many of my consulting clients’ code. Unfortunately, there could be a huge performance penalty when using this variant compared to a direct function handle, which many people are simply not aware of. I believe that even many MathWorkers are not aware of this, based on a recent conversation I’ve had with someone in the know, as well as on the numerous usage examples in internal Matlab code: see the screenshot below for some examples; there are numerous others scattered throughout the Matlab code corpus.

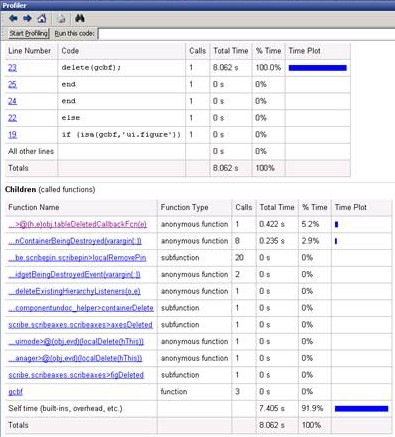

Part of the reason for this penalty not being well known may be that Matlab’s Profiler does not directly attribute the overheads. Here is a typical screenshot:

Profiling anonymous callback function performance

In this example, a heavily-laden GUI figure window was closed, triggering multiple cleanup callbacks, most of them belonging to internal Matlab code. Closing the figure took a whopping 8 secs. As can be seen from the screenshot, the callbacks themselves only take ~0.66 secs, and an additional 7.4 secs (92% of the total) is unattributed to any specific line. Think about it for a moment: we can only really see what’s happening in 8% of the time – the Profiler provides no clue about the root cause of the remaining 92%.

The solution in this case was to notice that the callback was defined using an anonymous function, @(h,e)obj.tableDeletedCallbackFcn(e). Changing all such instances to @obj.tableDeletedCallbackFcn (the function interface naturally needed to change to accept h as the first input arg) drastically cut the processing time, since direct function handles do not carry the same performance overheads as anonymous functions. In this specific example, closing the figure window now became almost instantaneous (<1 sec).
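A minimal sketch of this change follows. It assumes, hypothetically, that the callback is attached as a uitable’s DeleteFcn inside a handle class; the class name TableOwner is illustrative and the class must be saved as TableOwner.m. The only real changes are the handle syntax and the extra h input arg in the method signature:

```matlab
% TableOwner.m - hypothetical class illustrating the callback change
classdef TableOwner < handle
    properties
        hTable   % uitable whose deletion triggers the cleanup callback
    end
    methods
        function obj = TableOwner(hParent)
            obj.hTable = uitable(hParent);
            % Before (anonymous wrapper, method signature was (obj, e)):
            %   set(obj.hTable, 'DeleteFcn', @(h,e) obj.tableDeletedCallbackFcn(e));
            % After (direct handle; the method now also accepts h):
            set(obj.hTable, 'DeleteFcn', @obj.tableDeletedCallbackFcn);
        end
        function tableDeletedCallbackFcn(obj, h, e)
            % Invoked as obj.tableDeletedCallbackFcn(h, e) when the table is deleted
            fprintf('Cleaning up after deletion of a %s object\n', class(h));
        end
    end
end
```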

Conclusions

There are several morals that I think can be gained from this:

- When we see unattributed time in the Profiler summary report, odds are high that this is due to function-call overheads. MathWorks have significantly reduced such overheads in the new R2015b (released last week), but anonymous functions (and, to some degree, also class methods) still carry a non-negligible invocation overhead that should be avoided if possible, by using direct (possibly non-MCOS) functions.

- Use direct function handles rather than anonymous function handles, wherever possible

- In the future, MathWorks will hopefully improve Matlab’s new engine (“LXE”) to automatically identify cases of @(h,e)func(h,e) and replace them with faster calls to @func, but in any case it would be wise to manually make this change in our code today. It would immediately improve readability, maintainability and performance, while still being entirely future-compatible.

- In the future, MathWorks may also improve the overheads of anonymous function invocations. This is trickier than the straightforward lexical substitution above, because anonymous functions need to carry the run-time workspace with them. This is a little-known and certainly very little-used fact, which means that in practice most usage patterns of anonymous functions can be statically analyzed and converted into much faster direct function handles that carry no run-time workspace info. This is indeed tricky, but it could directly improve the performance of many Matlab programs that naively use anonymous functions.

- Matlab’s Profiler should really be improved to provide more information about unattributed time spent in internal Matlab code, to give users clues that would help them reduce it. Some information could be gained by using the Profiler’s -detail builtin option (which was documented until several releases ago, but then apparently became unsupported). I think that the Profiler should still be made to provide better insights in such cases.
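The run-time workspace that anonymous functions carry can be seen directly. This minimal sketch uses the built-in functions() function, which MathWorks documents as intended for debugging only, so its exact output format may vary between releases:

```matlab
% An anonymous function snapshots the variables it references when it is created
scale = 2;
f = @(x) scale * x;      % captures the current value of 'scale'
scale = 10;              % later changes do not affect f
info = functions(f);
disp(info.type)          % 'anonymous'
disp(info.workspace{1})  % struct holding the captured variable (scale = 2)
disp(f(3))               % 6, not 30, because f uses its captured copy of scale
```

A direct handle such as @myCallbackFunc has no such workspace to capture, which is part of why it is simpler for Matlab to invoke.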

Oh, and did I mention already the nice work MathWorks did with 15b’s LXE? Matlab’s JIT replacement was many years in the making, possibly since the mid-2000s. We now see just the tip of the iceberg of this new engine: I hope that additional benefits will become apparent in future releases.

For a definitive benchmark of Matlab’s function-call overheads in various variants, readers are referred to Andrew Janke’s excellent utility (with some pre-15b usage results and analysis). Running this benchmark on my machine shows a significant reduction in function-call overheads in 15b for many (but not all) invocation types.
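For a quick check on your own release, here is a much smaller sketch (not Andrew Janke’s utility). benchCallbackOverhead and myDummyCallback are hypothetical names, saved together as benchCallbackOverhead.m. It times the same empty callback through a direct handle and through an anonymous wrapper; absolute numbers depend heavily on the release and machine, so treat them as indicative only:

```matlab
function [tDirect, tAnon] = benchCallbackOverhead(nCalls)
    % Rough micro-benchmark of handle invocation overhead
    if nargin < 1
        nCalls = 1e5;
    end
    hDirect = @myDummyCallback;              % direct function handle
    hAnon   = @(h,e) myDummyCallback(h,e);   % equivalent anonymous wrapper

    tic;
    for k = 1:nCalls
        hDirect([], []);
    end
    tDirect = toc;

    tic;
    for k = 1:nCalls
        hAnon([], []);
    end
    tAnon = toc;

    fprintf('direct: %.2f us/call, anonymous: %.2f us/call\n', ...
            1e6*tDirect/nCalls, 1e6*tAnon/nCalls);
end

function myDummyCallback(h, e) %#ok<INUSD>
    % Intentionally empty, so that the timing is dominated by call overhead
end
```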

For those people wondering, 15b’s LXE does improve HG2’s performance, but just by a small bit – still not enough to offset the large performance hit of HG2 vs. HG1 in several key aspects. MathWorks is actively working to improve HG2’s performance, but unfortunately there is still no breakthrough as of 15b.

Additional details on various performance issues related to Matlab function calls (and graphics and anything else in Matlab) can be found in my recent book, Accelerating MATLAB Performance.

One thing with the modified code is that it is necessary to add an attachment to send an...


In your example, you used @obj.tableDeletedCallbackFcn instead of @(h,e) obj.tableDeletedCallbackFcn. But elsewhere on the site, in your MATLAB Performance book, and on the SO page you showed, it’s faster to do, for instance, tableDeletedCallbackFcn(obj).

So I have an awful lot of code where I set up callbacks as such:

So would you actually get better performance this time using obj.myCallback instead of myCallback(obj)?

@Clayton – when directly invoking a function, obj.tableDeletedCallbackFcn is indeed typically slower than tableDeletedCallbackFcn(obj). However, with callbacks you must specify a function handle and so the only alternatives are to either use @obj.tableDeletedCallbackFcn or an anonymous function (which is much slower). Anonymous functions, as of R2015b, are an order of magnitude slower than any other function invocation alternative.

In summary, @obj.myCallback is equivalent to, but faster, simpler and more maintainable than, @(h,e) myCallback(obj, h, e).

Note also that there’s a subtle difference between obj.myCallback() and myCallback(obj). The former explicitly states that a method of obj should be called (MATLAB won’t search anywhere else to find a myCallback). In contrast, the latter could call either a regular function or a method of obj. So I wouldn’t recommend switching between them blindly, even if the result is very likely to be the same.

If multiple arguments were involved, like in myCallback(a, b, c), and the arguments had different types, there would be even more possibilities for what gets called. E.g., if c had a higher precedence than the other two arguments, then the method from the class of c would be picked, if available. (See the InferiorClasses attribute used with classdef and also “Class Precedence” in the MATLAB documentation. The concept is called multiple dispatch, which is not a very common programming language feature, but makes a lot of sense in an environment geared towards scientific computing.)

These subtleties are probably not very relevant in the context of callbacks, but it is good to be aware of them nonetheless.

One more note about the performance implications of changing @(h, e) obj.tableDeletedCallbackFcn(e) to @obj.tableDeletedCallbackFcn (example from the article):

I fail to see why this should improve performance at all. My doubts stem from the fact that the latter looks like a simple function handle, but in fact turns out to be an anonymous function handle. MATLAB R2015b prints: @(varargin)obj.tableDeletedCallbackFcn(varargin{:}). So this only saves us from having to write out the arguments. And calling functions (a function that offers a glimpse at the internal representation of function handles) on that also confirms the type to be “anonymous”. From a conceptual point of view it has to be that way, because anonymous function handles are the only type capable of storing some workspace alongside the function handle. And, well, there’s this obj that needs to be stored somewhere.
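The representation described above can be inspected with a few lines. This is a minimal sketch: containers.Map is used only as a convenient built-in classdef object, and since functions() is documented as a debugging aid, the reported type and text may differ between releases:

```matlab
% Inspect how Matlab represents a handle created with @obj.method
m = containers.Map({'a','b'}, {1, 2});
fMethod = @m.keys;                          % method-style handle bound to m
fAnon   = @(varargin) m.keys(varargin{:});  % explicit anonymous equivalent
disp(func2str(fMethod))                     % text form of the handle
info = functions(fMethod);
disp(info.type)                             % reported as 'anonymous' in R2015b, per the comment above
disp(isequal(fMethod(), fAnon()))           % 1: both end up calling keys(m)
```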

I have to admit that I haven’t benchmarked these different versions, though. So maybe structurally there should be no big difference, but in practice there is.

I finally ran a simple benchmark (on OS X 10.10.5) to support my argument. It also very nicely shows the performance improvements in R2015b.

In the benchmark, obj is an instance of a custom polynomial class and x is a double scalar. The work done in the plus method is minor, so the performance is dominated by the function/method call overhead. Every run consists of a thousand invocations of the “function handle” created before the loop.

Results after 10 runs (in 84.13s) on MATLAB R2015a:

Results after 10 runs (in 47.81s) on MATLAB R2015b:

The last line was initially a silly experiment that turned out to be surprisingly fast. It is a class that holds an object instance and a function handle and overrides the subsref method to provide a function-handle-like interface. In the end, MethodHandle(obj, @method) should behave like @(varargin) method(obj, varargin{:}).

You are missing one important variant, which I expect to be the fastest of all:

@plus, where plus(obj,x) is a sub-function in the same class file as the main class, but implemented as a separate sub-function rather than a class method. This avoids the two most important method-invocation performance hotspots: anonymous functions and class methods. For any performance-critical code that is repeatedly invoked in class methods, I often use such sub-functions (or standalone procedural functions).

@Yair – there are probably many variants I haven’t tested. If I have a function used internally by a single class, then I fully agree with you. However, the exercise here was to create a function handle that forwards its arguments to a method of a class while passing an instance of that class as the first argument. And I fail to see how your suggestion could be used in this scenario, or am I misunderstanding you?
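The sub-function suggestion can be sketched as follows. PolyDemo and localPolyval are hypothetical names, and the class must be saved as PolyDemo.m. The local function is defined after the classdef block, is visible only within that file, and is called without method dispatch; as the reply notes, nothing is bound into its handle, so callers must pass the object’s data explicitly:

```matlab
% PolyDemo.m - hypothetical class using a local (non-method) helper function
classdef PolyDemo
    properties
        Coeffs = [1 2 3];   % polynomial coefficients, highest power first
    end
    methods
        function y = evalMany(obj, x)
            % Performance-critical loop: call the local function directly,
            % instead of a class method or an anonymous function
            c = obj.Coeffs;            % read the property once, outside the loop
            y = zeros(size(x));
            for k = 1:numel(x)
                y(k) = localPolyval(c, x(k));
            end
        end
        function h = evaluatorHandle(obj) %#ok<MANU>
            % A handle to the local function can also be handed out;
            % callers then pass the data explicitly
            h = @localPolyval;
        end
    end
end

function y = localPolyval(c, x)
    % Local function: visible only inside PolyDemo.m, not a class method
    y = 0;
    for n = 1:numel(c)
        y = y*x + c(n);            % Horner's rule
    end
end
```

For example, PolyDemo().evalMany([0 1 2]) returns [3 6 11].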

I’m using GUIDE for creating GUIs, and by default they do this for callbacks:

I tried changing this to @button_name_Callback, but it seems that where it’s evaluating the callbacks, functions like button_name_Callback inside Main_Program are out of scope. Is this a limitation of GUIDE, or do you know if there is some way I can make this work?

@Chris – this is a limitation of GUIDE. You can modify the callbacks programmatically in your GUIDE-generated m-file (Main_Program.m), for example in the OpeningFcn(). In this function, all the other subfunctions of the m-file are in scope, so you can directly set the relevant callbacks to their direct function handles.
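Here is a minimal, self-contained stand-in for that approach. guideStyleDemo is a hypothetical name replacing the GUIDE-generated file; in a real GUIDE file, the set(...) line would go inside the OpeningFcn. One assumption worth flagging: a direct handle passes only hObject and eventdata (not GUIDE’s handles struct), so the callback fetches handles itself via guidata:

```matlab
function hFig = guideStyleDemo
    % Programmatic stand-in for a GUIDE-generated m-file (names are illustrative)
    hFig = figure;
    handles.button_name = uicontrol(hFig, 'Style','pushbutton', 'String','Go');
    handles.counter = 0;
    guidata(hFig, handles);
    % Equivalent of what you would do in OpeningFcn: the sub-function is in
    % scope here, so a direct function handle can be assigned
    set(handles.button_name, 'Callback', @button_name_Callback);
end

function button_name_Callback(hObject, eventdata, handles)
    % A direct handle passes only (hObject, eventdata), so fetch handles here
    if nargin < 3
        handles = guidata(hObject);
    end
    handles.counter = handles.counter + 1;   % illustrative state update
    guidata(hObject, handles);
end
```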

I’ve tried to adapt the function callback for my GUI designed with GUIDE. I’ve adapted

to

@testListBox1_Callback inside of my main code.

But in my case it won’t work to get the Callback faster. Can you help?

Alex

@Alex – there is no immediate answer. If you want me to look at your specific code, then email me (altmany at gmail) for a dedicated consulting session.

While the obj.function vs function(obj) times are almost identical for Matlab code (see e.g. the discussion here: https://stackoverflow.com/a/1745686/3620376), the same is not true for calls into .NET (C#) code. The results were quite clear: in my case, 35.57 seconds (Net 4.5 obj.nop()) vs 8.72 seconds (Net 4.5 nop(obj)).

I’ve made an update to Andrew Janke’s repo at https://github.com/apjanke/matlab-bench to test that.


Even more interesting is the fact that an event callback thrown in .NET and caught in Matlab is slower when it is a pure function handle. The difference is marginal but visible, and contrary to your example above, where you state that all anonymous function calls should be replaced by non-anonymous ones where possible.


‘DataIsReady’ is a .NET Framework 4.5 (C#) event. I’m using Matlab R2019b on Windows 10.

vs

Profiler times:

| Function name | Calls | Total time | Self time |
|---|---|---|---|
| …j.onSerialBytesAvailableNet(src,evnt) | 40807 | 8.369 s | 0.220 s |
| …nSerialBytesAvailableNet(varargin{:}) | 38265 | 8.407 s | 0.347 s |

It looks like addlistener calls the function with varargin{:} when a non-anonymous function handle is used. I don’t know whether that’s specific to .NET events or the default behavior, but in my case it is more than 50% slower (in self time).

I know there is a large number of function calls, and this is probably not worth mentioning or significantly impacting performance, but I just figured this out and wanted to hear your comments.

Thanks